Q&A: Barbara Olson on using a system of workplace justice to support safety

Barbara Olson, M.S., R.N., CPPS

Barbara Olson, M.S., R.N., CPPS, is a Senior Advisor with The Just Culture Company, where she supports health care organizations in improving patient and worker safety. She is a Fellow of the Institute for Safe Medication Practices and a TeamSTEPPS master trainer. Olson previously served as Corporate Director of Patient Safety at HCA Healthcare and Vice President of Clinical Improvement & Healthcare Safety at Lifepoint Health. She began her career as a perinatal nurse. Olson was among the speakers at the Institute for Healthcare Improvement’s Patient Safety Congress who discussed issues related to the negligent homicide conviction of RaDonda Vaught, R.N., for a fatal medication error.

Betsy Lehman Center: The case of RaDonda Vaught has fueled debate about accountability and justice following harm due to medical errors. Many organizations look to the principles of “just culture” for guidance. Please explain what just culture is and how it applies in this case.

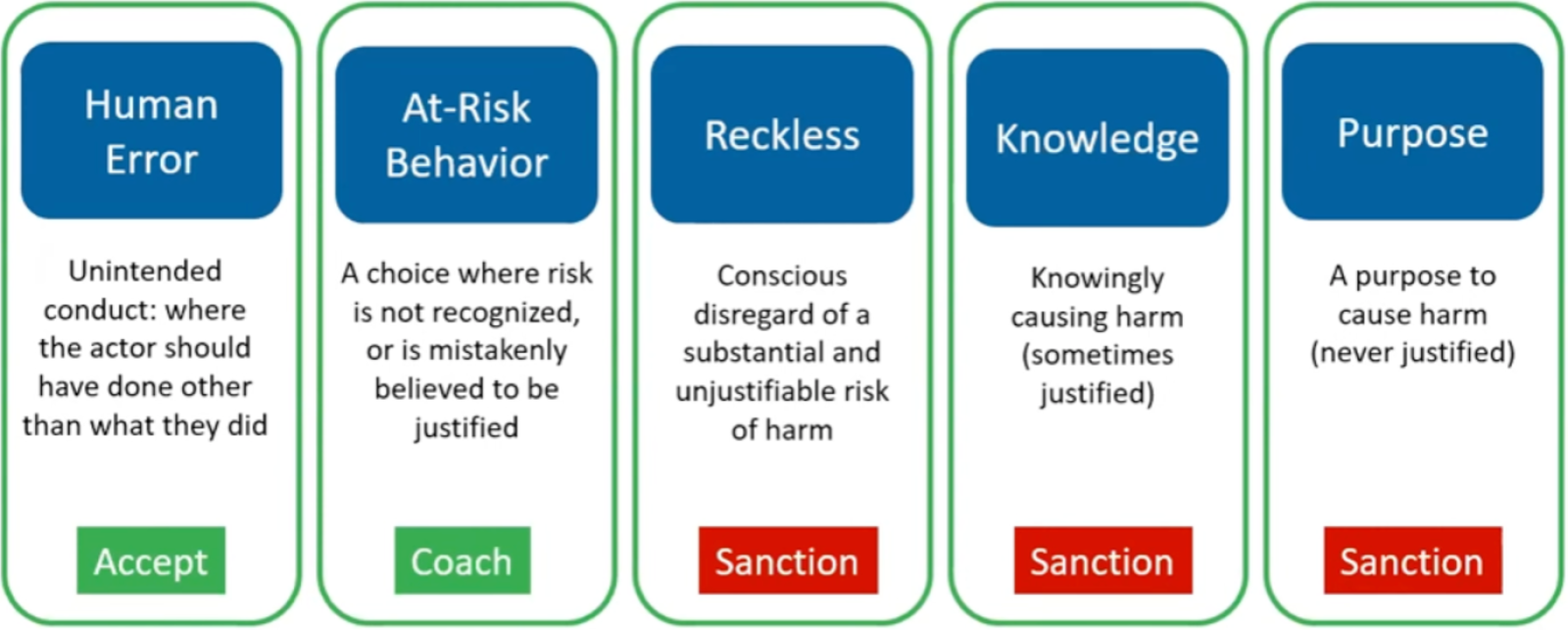

Olson: Our model of just culture refers to a system that gives organizations a way to consistently recognize, reward, tolerate, or punish conduct that occurs in the workplace across all industries. It’s a means to evaluate and respond to errors and choices that jeopardize the values of an organization. We evaluate behaviors in five categories and assign a standard organizational response based on the nature of the act. Individuals are judged for the quality of their choices, not the occurrence or severity of harm.

The Just Culture Continuum

Used with permission from The Just Culture Company © 2016

The first category in the Just Culture continuum is unintended human error. Errors are slips, trips, cognitive lapses — the things that people know how to do but simply fail to execute as they intended. Individuals who err in this way while on the job do not face disciplinary sanction in the model of just culture I’m describing.

The second category is at-risk behavior, which involves choice. These are decisions where an individual fails to recognize increased risk or mistakenly believes it to be justified. The appropriate response to at-risk behavior is twofold. Individuals should receive coaching: a candid, values-focused conversation for purposes of helping a worker see and appreciate risks associated with the choice. At the organizational level, at-risk behavior signals the opportunity to improve systems, assess workflows, or clarify values.

The Just Culture behavioral continuum extends through increasing levels of awareness and intention to an endpoint where individuals intentionally cause harm — physical, emotional, reputational or financial. There is universal agreement that these behaviors, including conscious or reckless disregard for the risk of harm, warrant discipline.

The first two categories, human error and at-risk behavior, are at the center of the debate about the case of nurse RaDonda Vaught. Based on information available publicly, it appears Vaught made a series of human errors and at-risk behavioral choices that contributed to Charlene Murphey’s death from a wrong-drug error. Vaught’s actions have been characterized as unintentional. The question of recklessness has been raised, but it is difficult to see how she, in carrying out a series of inter-related actions in a complex system, acted with conscious disregard of substantial and unjustifiable risks that were visible to her as she rendered care.

In my experience, the severity of the outcome often skews judgment, causing acts to be labeled as reckless because we cannot imagine that anything short of recklessness could result in such jarring tragedy. A Just Culture assessment purposefully considers if an individual would be subject to the same level of disciplinary action had the consequence to the patient been different.

Betsy Lehman Center: How does the Just Culture approach to at-risk behaviors inform efforts to improve patient safety?

Olson: I go back to a concept I first encountered years ago when I heard Terry Fairbanks of MedStar Health describe the gap between work as imagined and work as performed. In health care, that gap is where most at-risk behavior lives. We’re talking about less-than-ideal practices that may seem reasonable to clinicians at the time but pose risks for patients and staff and become the norm if they’re not revealed and examined.

Those risky choices, acts or omissions are often seen as time-savers or necessary to uphold a more important value. Timeliness will often trump thoroughness, for example. Sometimes at-risk behavior is simply what clinicians do to get through the shift as best they can, using available tools and processes. Seen that way, organizations can influence how frequently clinicians feel the need to work around, to go “off script” so to speak. By recognizing the behavior and creating awareness, leaders can see the hidden risks. Ideally, they’re able to intervene before unwelcome practices become habitual.

This is where psychological safety comes in. We want workers to feel comfortable, and we want to encourage them to speak up and share what’s not working, whether that’s a supply shortage, a software interface or an ambiguous order set.

An effective shift debrief is one way to surface at-risk behaviors. But organizations must first acknowledge that things will happen in the course of a routine day that are not ideal, that do not conform to standard policies and procedures. They must own the gap between work as performed in the real world and work as imagined when policies are written.

It’s even possible for at-risk behavior to be innovative — to be a good thing — but if workers aren’t able to disclose and examine these behaviors, they are just workarounds. That’s why it’s important to use a framework of what's recognized, rewarded, tolerated, or punished.

Betsy Lehman Center: On Twitter, you describe yourself as “A nurse with an engineer's mind striving for justice and driving improvement through #JustCulture.” What role does engineering play in your work on safety and justice?

Olson: I tend to think in terms of systems. To get to any measure of reliable performance, we have to understand how well the system of care understands and manages the possibility of failure.

The Vaught case provides an example of how to manage, or how not to manage, a system for safe medication administration. Let’s look at just one action in this complicated case, the nurse’s retrieval of the wrong drug. And we’ll say the failure rate (the risk that a nurse will retrieve the wrong medication from an automated dispensing cabinet) is 1 in 1,000. That’s a ballpark number engineers often use when modeling human error. We can design our system to lessen the risk of catastrophic failure from a wrong-drug retrieval error by lowering the risk of the error. That is the real work of patient safety.

We must begin by acknowledging that human error will occur, that under some circumstances, a nurse will retrieve the wrong drug. So, we apply barriers — things that prevent that undesirable event from being set in motion. And we do this as far upstream as feasible. Barriers include things like storage constraints, prohibiting certain drugs from being available outside of a pharmacy, restricting their retrieval through overrides, and keeping them sequestered in drawers with lidded boxes that open only after a clinician answers a series of questions or after a second clinician verifies the need to retrieve it. Vials can be made to feel and open differently than others, using shrink wrap and other tactile cues. These are just a few examples. The nature of those barriers differs according to the likelihood of harm should the drug reach the patient in error.

The paralytic that took the life of Charlene Murphey is in a class of drugs that demands the highest level of safeguarding to prevent them from being retrieved in error. The Institute for Safe Medication Practices issued guidance in 2016 to prevent accidental use of the drug after similar errors occurred in other hospitals.

Applying or not applying constraints is more than a binary “check the box” activity. From an engineering standpoint, these safeguards substantively reduce the likelihood that high-risk drugs will be retrieved in error, moving it from 1 in 1,000 to 1 in 10,000 or perhaps 1 in 100,000. For reasons that remain unknown, it appears the drug that claimed Ms. Murphey’s life was available to nurses in the unit where Vaught practiced through the same keystrokes used to obtain any drug on override.

It's important to recognize that the risk of an adverse, even tragic, event will never be zero. But it can be reduced, and when upstream barriers are combined with downstream defenses (team-based activities like two-person double checks and barcode scanning), errors can reach patients so infrequently that we might be tempted to call them “never events.”
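The arithmetic behind layered defenses can be sketched in a few lines of Python. This is an illustration only, not a model from the interview: the 1 in 1,000 and 1 in 100,000 retrieval rates are the figures Olson cites, while the function `residual_risk`, the miss rates assigned to the double check and the barcode scan, and the assumption that each layer fails independently are all hypothetical.

```python
from fractions import Fraction


def residual_risk(base_rate, layer_miss_rates):
    """Chance that an error occurs and slips past every downstream layer.

    Assumes the layers fail independently, which real systems rarely
    guarantee; treat the result as a rough bound, not a prediction.
    """
    risk = base_rate
    for miss_rate in layer_miss_rates:
        risk *= miss_rate
    return risk


# Figures quoted in the interview for wrong-drug retrieval.
unprotected = Fraction(1, 1000)       # no special barriers
safeguarded = Fraction(1, 100_000)    # strong upstream barriers in place

# Hypothetical miss rates for downstream checks (illustrative assumptions).
double_check_miss = Fraction(1, 10)   # two-person check misses 1 error in 10
barcode_scan_miss = Fraction(1, 100)  # barcode scan misses 1 error in 100

reaches_patient = residual_risk(
    safeguarded, [double_check_miss, barcode_scan_miss]
)

print(f"Unprotected retrieval error: 1 in {unprotected.denominator:,}")
print(f"Error reaching the patient:  1 in {reaches_patient.denominator:,}")
```

Under these assumed numbers the risk never reaches zero, but each added layer multiplies it down, which is the point of placing barriers upstream and checks downstream.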

Engineering safety means we cease questing for clinicians who will never err, who will never be inexperienced, who will never be distracted, who will never be tired. Instead we can design systems to reasonably prevent errors that fallible humans will make and allow for robust opportunities to detect and correct them when they do.

In my own work in safety, I don't want to be remembered for telling workers what they shouldn't do. I want to be remembered for saying there's a better way to do it and this is what it looks like. The patient safety community has many achievements to its credit, but I’m concerned for the future. The analysis of the individual within a complex system I’ve just provided could have been, and was, written in the aftermath of the tragic death of Betsy Lehman decades ago.

We're not going to get to the next generation of reliable performance one procedure or one evidence-based bundle at a time. We also won’t get there without appreciating the important, but not limitless, contributions of skilled and dedicated clinicians who are as fallible today as they ever were and, in the aftermath of a worldwide pandemic, exhausted.

It’s time to infuse our action plans — nationally and at the local level — with teachable, learnable strategies and measure the reliability of our key processes of care like an engineer.

Further resources

- Lessons Learned about Human Fallibility, System Design, and Justice After a Fatal Medication Error: a webinar from the Institute for Safe Medication Practices and The Just Culture Company

- Let's focus on safety systems improvement, not individual blame: a letter from Barbara Fain, Executive Director, Betsy Lehman Center for Patient Safety